AI slop

The internet split in two. Which side are you publishing to?

Copy this prompt into your LLM’s custom instructions.

Every output will follow these rules:

# FOLLOW THIS WRITING STYLE:

• SHOULD use clear, simple language.

• SHOULD be spartan and informative.

• SHOULD use short, impactful sentences.

• SHOULD use active voice; avoid passive voice.

• SHOULD focus on practical, actionable insights.

• SHOULD use bullet point lists in social media posts.

• SHOULD use data and examples to support claims when possible.

• SHOULD use "you" and "your" to directly address the reader.

• AVOID em dashes anywhere in your response. Use only commas, periods, or other standard punctuation. If you need to connect ideas, use a period, never an em dash.

• AVOID constructions like "...not just this, but also this".

• AVOID metaphors and clichés.

• AVOID generalizations.

• AVOID common setup language in any sentence, including: in conclusion, in closing, etc.

• AVOID output warnings or notes. Give only the requested output.

• AVOID unnecessary adjectives and adverbs.

• AVOID staccato, stop-start sentences.

• AVOID rhetorical questions.

• AVOID hashtags.

• AVOID semicolons.

• AVOID markdown.

• AVOID asterisks.

• AVOID these words:

"can, may, just, that, very, really, literally, actually, certainly, probably, basically, could, maybe, delve, embark, enlightening, esteemed, shed light, craft, crafting, imagine, realm, game-changer, unlock, discover, skyrocket, abyss, not alone, in a world where, revolutionize, disruptive, utilize, utilizing, dive deep, tapestry, illuminate, unveil, pivotal, intricate, elucidate, hence, furthermore, however, harness, exciting, groundbreaking, cutting-edge, remarkable, it, remains to be seen, glimpse into, navigating, landscape, stark, testament, in summary, in conclusion, moreover, boost, skyrocketing, opened up, powerful, inquiries, ever-evolving"

# IMPORTANT: Review your response and ensure no em dashes!

“Slop” was Merriam-Webster’s Word of the Year for 2025.

Not “AI.” Not “agent.” Not “vibe coding.”

Slop.

The etymology traces back to 1700s references to “soft mud” and 1800s “food waste.” Now it describes the waste product of the creator economy. Digital runoff from the generative AI boom.

Last week, Fortune profiled a 22-year-old college dropout earning $700,000 a year from AI-generated YouTube videos. His “Boring History” channel produces six-hour documentaries for $60 each. Fully automated from script to narration to visuals.

Half of you will call this genius.

Half of you will call it slop that is destroying the internet.

Both miss the point.

So what actually counts as slop?

AI slop is a failure of judgment, not a failure of tooling.

Slop appears when content presents conclusions without showing how those conclusions were reached, bounded, or checked.

The missing element is not effort or polish. It is responsibility.

Information alone is cheap.

Judgment is what costs time.

Slop removes that cost and calls the result finished work.

AI slop is not “content made with AI.”

AI slop is content with no point of view.

That distinction is everything.

Human slop has existed since the first blog post. We ignored it because production friction filtered out the noise. Writing 1,000 words took effort. Publishing required someone to say yes.

ChatGPT removed the friction.

Now everyone can publish content.

The slop was always there. AI just removed the hiding place.

The data is now undeniable

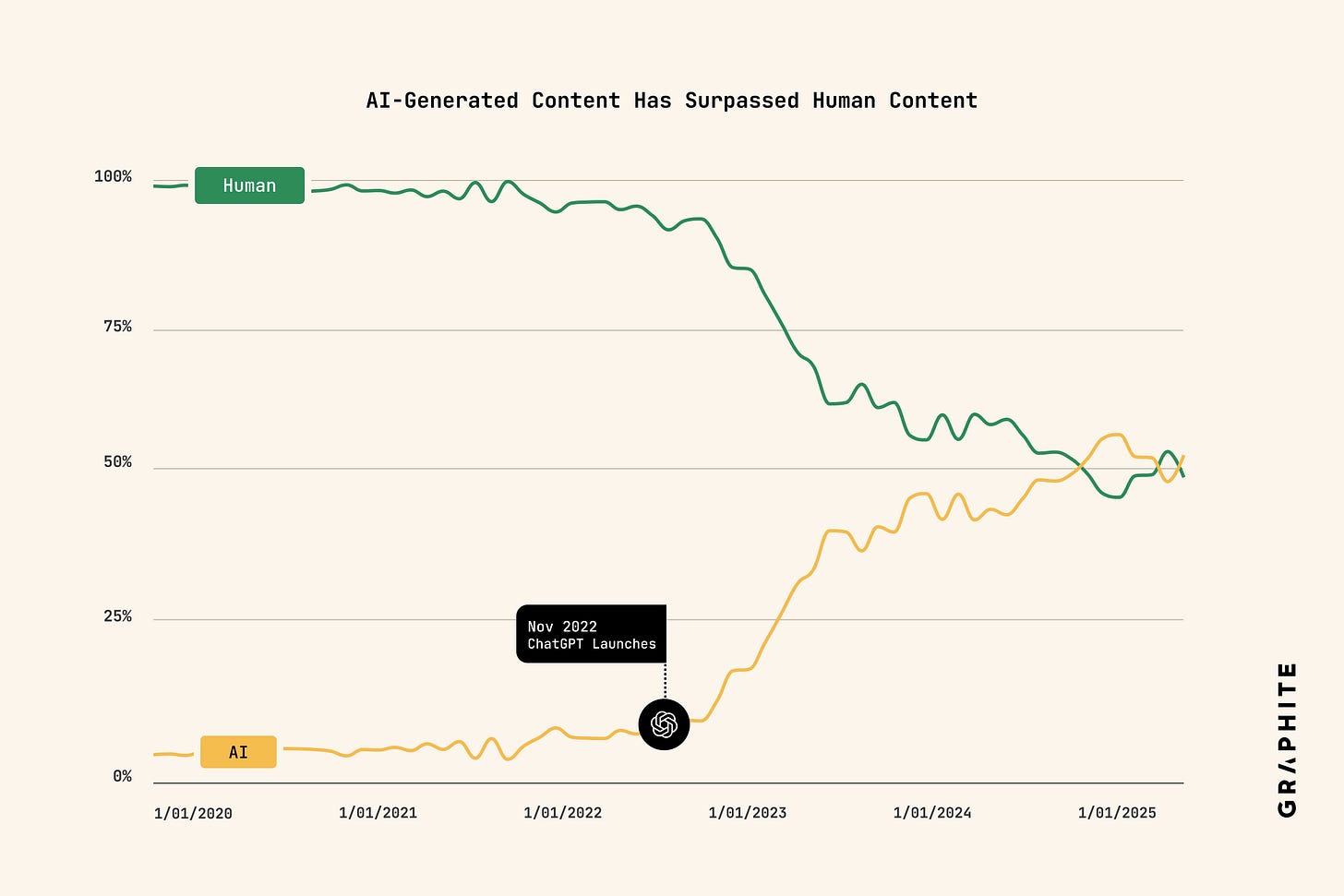

Graphite’s 2025 study analysed 65,000 articles and estimated that AI-generated content now accounts for 52% of written content on the internet. In November 2024, AI content briefly surpassed human content for the first time.

Before ChatGPT launched in 2022, AI content was roughly 10%. Within 12 months, it hit 39%. Now it’s the majority.

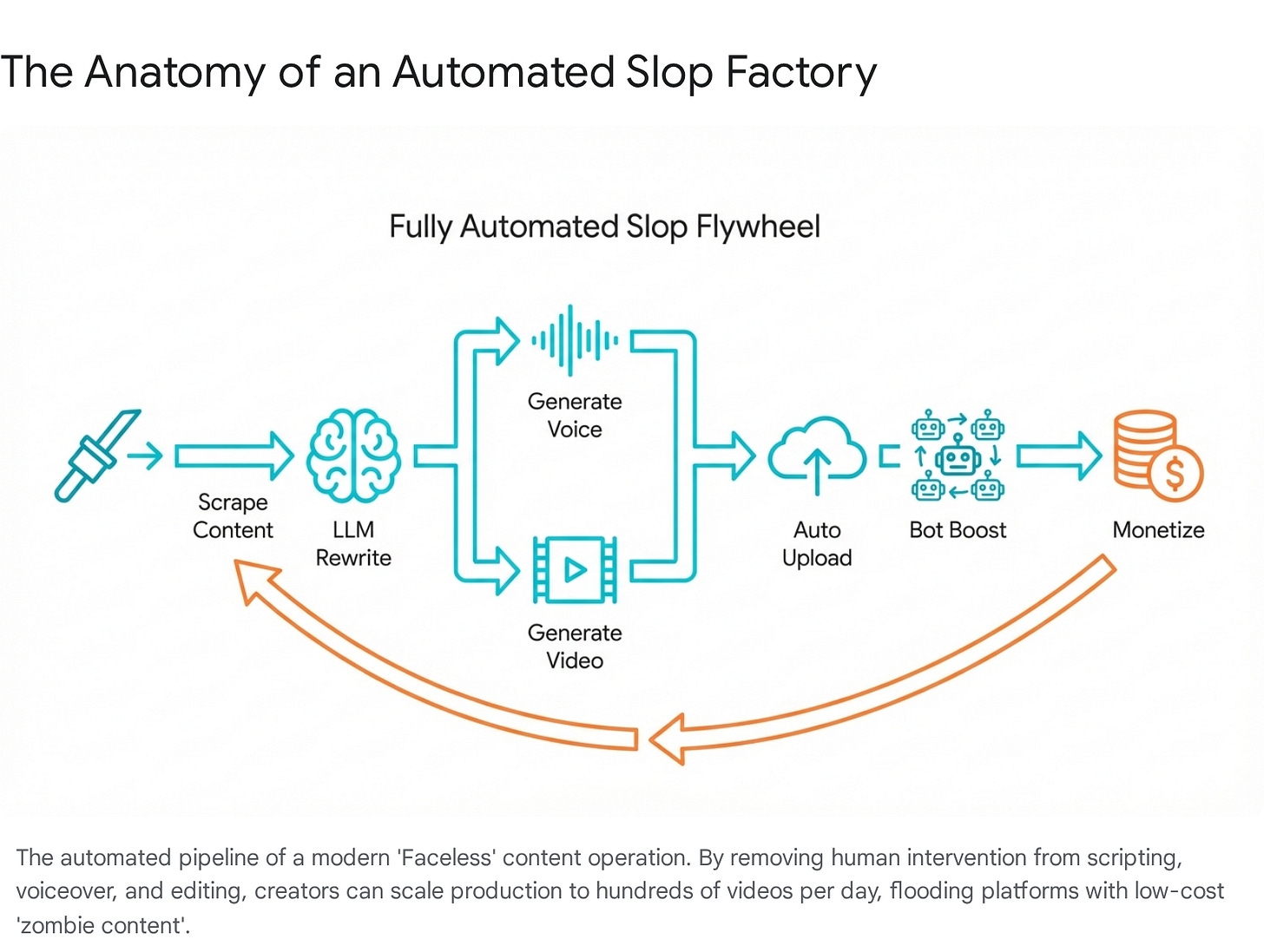

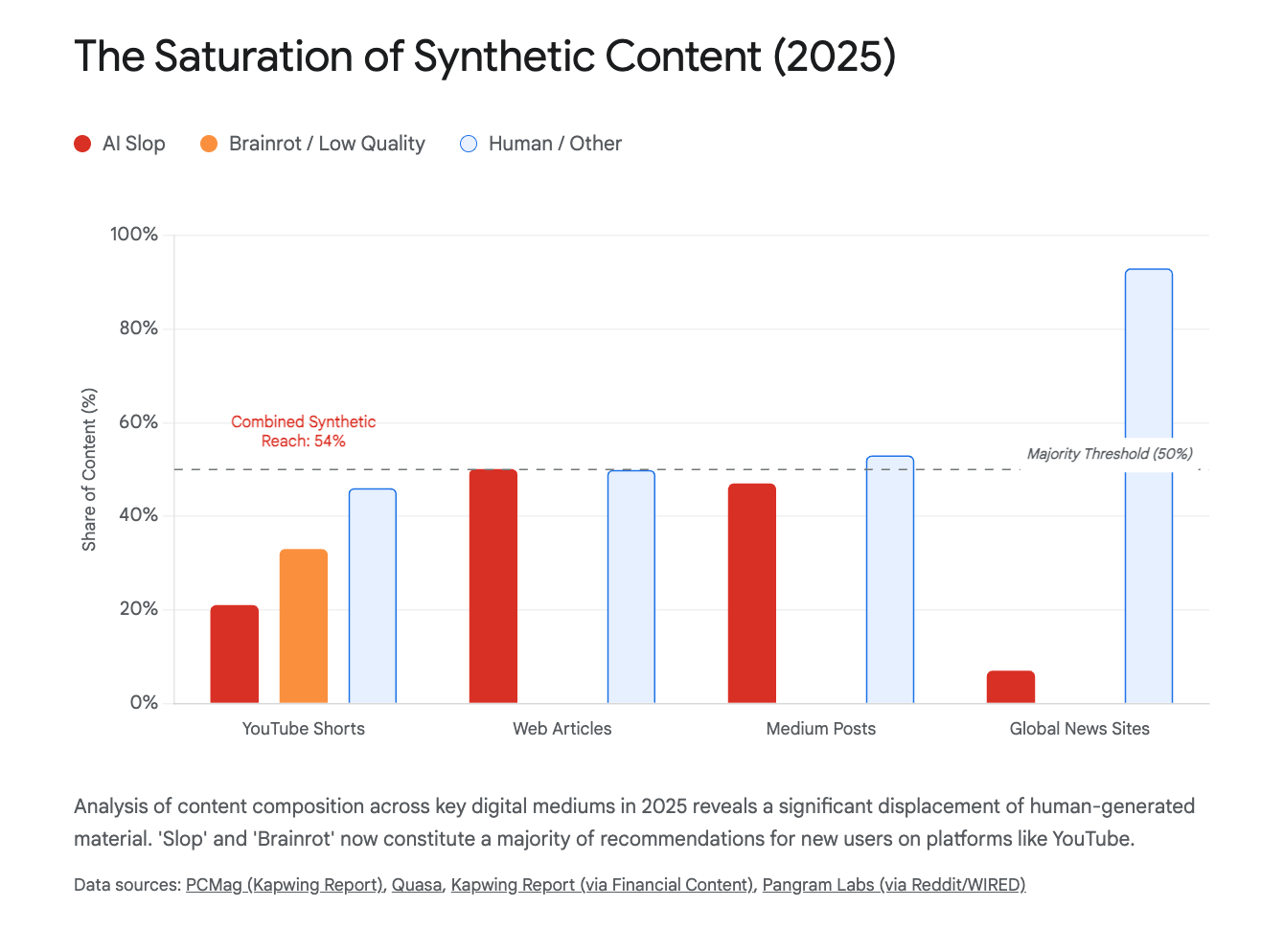

Kapwing’s research quantified video slop for the first time:

→ 21% of YouTube Shorts recommended to new users: AI slop

→ Another 33%: “brainrot” (sensory overload content)

→ Top 278 AI slop channels: $117 million in annual ad revenue

→ 63 billion views. 221 million subscribers. Collectively.

On TikTok, AI filters have appeared in over 155 million videos. Faceless AI channels consistently exceed 1 million views per video.

The Fortune profile we mentioned? That creator runs his entire operation on two hours of work per day. Margins of 85-89%. Two million views daily.

That’s a business model.

You may have seen “Shrimp Jesus.”

In 2024, Facebook feeds flooded with grotesque, hyper-realistic images of Jesus Christ fused with crustaceans. Captions like “Why don’t pictures like this ever trend?” or “My son made this with his own hands!”

The reaction was laughter. If you’re religious, apologies if this offends. But the absurdity is hard to ignore.

Bot networks boosted these posts with thousands of fake “Amen” comments. The algorithm, blind to the semantic absurdity, rewarded the high interaction by pushing the content to real users, particularly older users who engage with religious imagery.

The economic driver? Creator Bonus programs where high engagement translates directly into payout. Regardless of quality. Regardless of truth.

Shrimp Jesus is harmless. The next examples are not.

This is where slop gets dangerous

We want to use AI for good. To build, to create, to help people. What follows is the opposite. Humans using AI to scam and deceive. We can be awful sometimes. This is the worst of us.

Mushroom foraging guides that kill people

Amazon’s Kindle Direct Publishing saw a surge in AI-generated foraging guides containing life-threatening advice. Some misidentified lethal species as edible. Their authors were non-existent personas, created with AI to feign expertise.

Over 3,000 titles listing ChatGPT as an author. The asymmetry of effort is brutal: seconds to generate and publish a dangerous guide, hours of expert labour to identify and report it.

Fake obituaries that monetize grief

Scammers use AI to scrape death notices and funeral home websites, then instantly generate hundreds of “tribute” articles and YouTube videos for the recently deceased.

These fake obituaries are optimised to outrank legitimate funeral home pages. They monetize grief through ads or phishing for “funeral donations.” The speed means fake notices often appear online before the family has finished making arrangements.

Because AI “fills in the blanks,” these fabricated obituaries contain invented family members, incorrect causes of death, and quotes that were never said.

A giant AI-generated rat penis in a peer-reviewed journal

In 2024, Frontiers in Cell and Developmental Biology published a peer-reviewed paper featuring an AI-generated diagram of a rat. The diagram depicted the animal with biologically impossible anatomy, labelled with nonsense text like “dck” and “testtomcels.”

Not a joke. A catastrophic failure of peer review. Visual slop contaminating the scientific record.

This is what we want no part of. This is what gives AI a bad name.

What matters here is not malicious intent but a collapsed process.

The harm emerges when verification, accountability, and friction disappear.

We have seen this pattern before in predatory journals, essay mills, and contract cheating.

AI did not invent the behaviour. It removed the brakes.

How to spot slop instantly

A distinct AI dialect has emerged. At scale, AI systems reuse certain words and phrases far more often than most human writers do.

This is not accidental. It is a consequence of how contemporary AI systems are aligned. After pre-training on vast scraped text, models are fine-tuned through human feedback. Annotators are asked to select outputs that sound helpful, safe, and authoritative. Over time, the model learns to favour particular styles of English.

Those styles tend to come from institutional writing. Academic prose. Consultancy reports. Policy briefs. Marketing copy. English that signals seriousness without needing to say anything precise.

The result is a surface tone that sounds educated while distancing many readers.
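The preference step described above is commonly implemented by training a reward model on pairs of outputs. Below is a toy Python sketch of that step only. It uses a linear reward over two hypothetical features (how formal an output sounds, how specific it is) and fits it with the standard pairwise logistic (Bradley–Terry) loss. Real systems use a neural reward model and then optimise the language model against it with reinforcement learning. None of that is shown here, and the feature names, data and the function `train_pairwise_reward` are invented for illustration.

```python
import math

def train_pairwise_reward(pairs, lr=0.1, epochs=200):
    """Fit a linear reward r(x) = w . x from annotator preference pairs.

    Each pair is (features_of_preferred_output, features_of_rejected_output).
    Minimises the pairwise logistic loss -log(sigmoid(r(preferred) - r(rejected)))
    with plain per-pair gradient descent.
    """
    w = [0.0] * len(pairs[0][0])
    for _ in range(epochs):
        for preferred, rejected in pairs:
            diff = [p - q for p, q in zip(preferred, rejected)]
            margin = sum(wi * di for wi, di in zip(w, diff))
            # The gradient of -log(sigmoid(margin)) w.r.t. w is -(1 - sigmoid(margin)) * diff,
            # so a descent step adds lr * (1 - sigmoid(margin)) * diff.
            step = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            w = [wi + lr * step * di for wi, di in zip(w, diff)]
    return w

# Hypothetical features: [sounds_formal, is_specific], each scored 0..1.
# In every pair the annotator preferred the more formal output,
# while both outputs were equally specific.
pairs = [
    ([1.0, 0.5], [0.0, 0.5]),
    ([0.8, 0.2], [0.1, 0.2]),
    ([0.9, 0.9], [0.3, 0.9]),
]
weights = train_pairwise_reward(pairs)
print(weights)  # first weight positive, second stays at 0.0
```

The learned reward pays for formality alone, because formality is the only thing the preferences varied on. A model optimised against that reward has every incentive to sound formal, whether or not it says anything specific.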

Words strongly associated with AI slop:

Delve. Embark. Unleash. Unlock. Elevate. Harness. Utilize. Tapestry. Realm. Landscape. Testament. Synergy. Game-changer. Vibrant. Seamless. Intricate. Groundbreaking. Cutting-edge. Pivotal.

Phrases that perform seriousness without adding clarity:

“It is important to note.” “In conclusion.” “A rich tapestry.” “In a world where.” “Shed light on.” “Dive deep into.” “Not just X, but also Y.”

None of this means these words or phrases automatically signal slop. Humans use them too. The risk lies in density and function.

When they replace explanation, precision, or accountability, they mark where thinking has thinned out.
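To make “density” concrete, here is a minimal Python sketch that counts marker words and phrases per 1,000 words. The function name `marker_density`, the per-1,000-words scale and exact-form matching are assumptions for illustration. The lists are a subset of the ones above. The score only tells you where to look harder. Judging function still needs a human reader.

```python
import re

# Illustrative subset of the marker words and phrases listed above.
MARKER_WORDS = [
    "delve", "embark", "unleash", "unlock", "elevate", "harness", "utilize",
    "tapestry", "realm", "landscape", "testament", "synergy", "game-changer",
    "vibrant", "seamless", "intricate", "groundbreaking", "cutting-edge", "pivotal",
]
# "A rich tapestry" is left out because "tapestry" is already counted as a word.
MARKER_PHRASES = [
    "it is important to note", "in conclusion", "in a world where",
    "shed light on", "dive deep into",
]

def marker_density(text: str) -> float:
    """Return marker hits per 1,000 words: a crude density score, not a verdict.

    Matching is case-insensitive and exact-form only, so "delves" or
    "unlocked" are not counted.
    """
    lowered = text.lower()
    words = re.findall(r"[a-z]+(?:[-'][a-z]+)*", lowered)
    if not words:
        return 0.0
    hits = 0
    for marker in MARKER_WORDS + MARKER_PHRASES:
        # \b keeps "realm" from matching inside a longer word such as "realms".
        hits += len(re.findall(r"\b" + re.escape(marker) + r"\b", lowered))
    return 1000 * hits / len(words)

sample = "Let us delve into the rich tapestry of this vibrant landscape."
print(round(marker_density(sample), 1))  # → 363.6 (4 markers in 11 words)
```

The source gives no threshold, so this sketch sets none. Compare scores across drafts by the same writer rather than treating any number as proof.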

Recent evidence shows this shift can be measured. A 2025 preprint analysing PubMed records from 2000 to 2024 tracked terms widely associated with ChatGPT-style writing and compared them with standard academic language. Over 100 of the AI-associated terms showed a statistically significant rise, with usage accelerating after 2020 and peaking following ChatGPT’s release.

The authors are careful on causality. These words were already increasing slowly. ChatGPT did not invent them. It amplified them.

What changed was speed, repetition, and saturation.

AI did not create the dialect. It normalised it at scale.
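A simplified version of that before-and-after comparison fits in a few lines of Python. This is not the preprint’s method, which relied on statistical significance testing. It only shows why raw counts mislead. Each year’s count of a term is divided by the count of baseline terms in the same year, a stand-in for “standard academic language” that corrects for growth in total publications. The function names, this normalisation and the choice of 2023 as a cutoff (the first full year after ChatGPT’s November 2022 release) are assumptions.

```python
from statistics import mean

def normalised_frequency(term_counts, baseline_counts):
    """Per-year count of a target term divided by the same year's baseline count.

    Both arguments map year -> count. Years with a zero baseline are skipped.
    """
    return {
        year: term_counts.get(year, 0) / base
        for year, base in baseline_counts.items()
        if base > 0
    }

def post_pre_ratio(freq, cutoff_year):
    """Mean normalised frequency from cutoff_year onward over the mean before it."""
    pre = [v for year, v in freq.items() if year < cutoff_year]
    post = [v for year, v in freq.items() if year >= cutoff_year]
    if not pre or not post or mean(pre) == 0:
        raise ValueError("need non-zero data on both sides of the cutoff")
    return mean(post) / mean(pre)
```

A ratio well above 1 means the term outgrew baseline academic vocabulary after the cutoff. A ratio near 1 means it only kept pace with publication volume. Neither result explains why, which is the same caution the authors apply.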

Vocabulary alone is not the test.

What matters more is whether the process is visible.

Beyond vocabulary, these structural markers are equally telling:

→ Rigid intro, bullet list, conclusion format

→ Moralising wrap-ups regardless of topic

→ Em dashes everywhere

Researchers describe the effect as a “glaze” over the reader’s mind: with no narrative friction, the brain disengages.

Instead, write like you talk. Use short sentences, active voice, and specific details instead of vague adjectives. If a stranger could have written it, delete it.
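Two of the structural markers listed above are easy to count. The minimal sketch below assumes plain text with one list item per line. It reports em dashes per 100 words and the share of non-empty lines that start with a bullet (-, *, •, →) or a number. The function name and both measures are illustrative choices, not an established detector. Moralising wrap-ups still need a human reader.

```python
import re

EM_DASH = "\u2014"  # the em dash character, written as an escape
# A line counts as a bullet if it starts with -, *, •, → or "1." / "1)".
BULLET = re.compile(r"^\s*(?:[-*\u2022\u2192]|\d+[.)])\s+")

def structural_markers(text: str) -> dict:
    """Return em dashes per 100 words and the share of non-empty lines that are bullets."""
    lines = [line for line in text.splitlines() if line.strip()]
    word_count = len(text.split())
    bullet_lines = sum(1 for line in lines if BULLET.match(line))
    return {
        "em_dashes_per_100_words": 100 * text.count(EM_DASH) / word_count if word_count else 0.0,
        "bullet_line_share": bullet_lines / len(lines) if lines else 0.0,
    }

draft = "Here is the plan.\n\u2192 Step one\n\u2192 Step two\nIt works \u2014 mostly."
print(structural_markers(draft))
```

Words are counted by splitting on whitespace, so a spaced em dash counts as one word. The rough rate is enough to compare drafts.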

Pick your lane

Despite 52% of content being AI-generated, only 14% of articles appearing in Google Search are AI-made. Google’s algorithms appear to filter out much of the slop.
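Those two figures imply a steep gap. Here is a rough calculation. It assumes the 52% and the 14% describe the same pool of articles, which the figures alone do not guarantee. Divide each group’s share of Search results by its share of all content:

$$
\frac{0.14}{0.52} \approx 0.27 \;\; \text{(AI-generated)}, \qquad \frac{0.86}{0.48} \approx 1.79 \;\; \text{(human-written)}, \qquad \frac{0.27}{1.79} \approx 0.15
$$

On that assumption, an AI-generated article is about 15% as likely as a human-written one to appear in Google Search.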